Stories posted under Pinkbike Community blogs are not edited, vetted, or approved by the Pinkbike editorial team. These are stories from Pinkbike users.


Pinkbike server efficiency

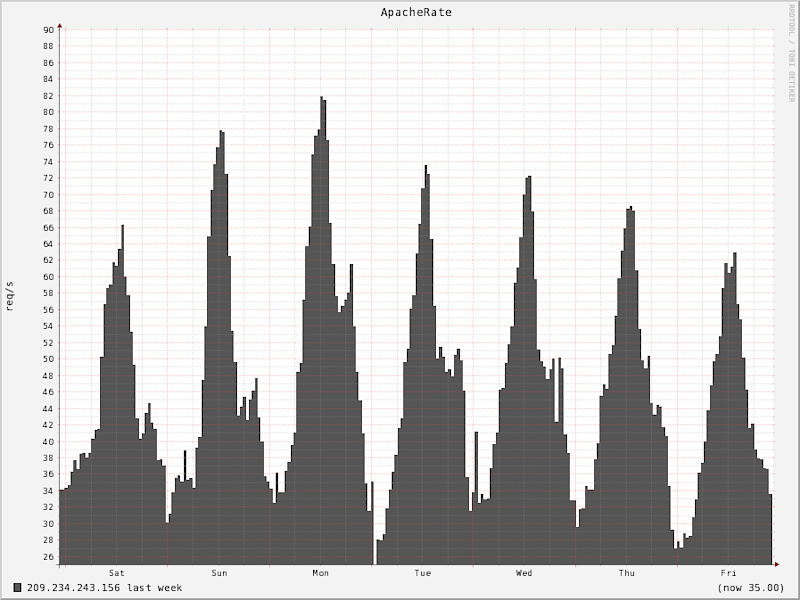

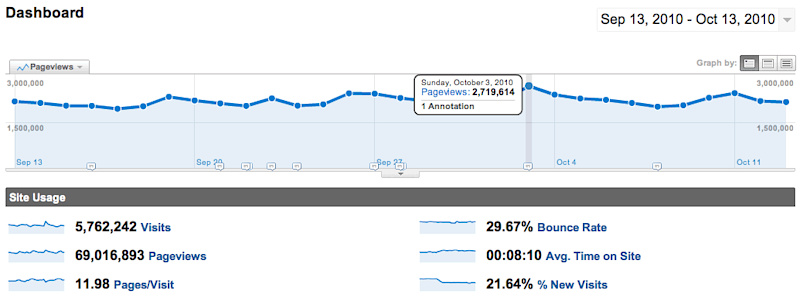

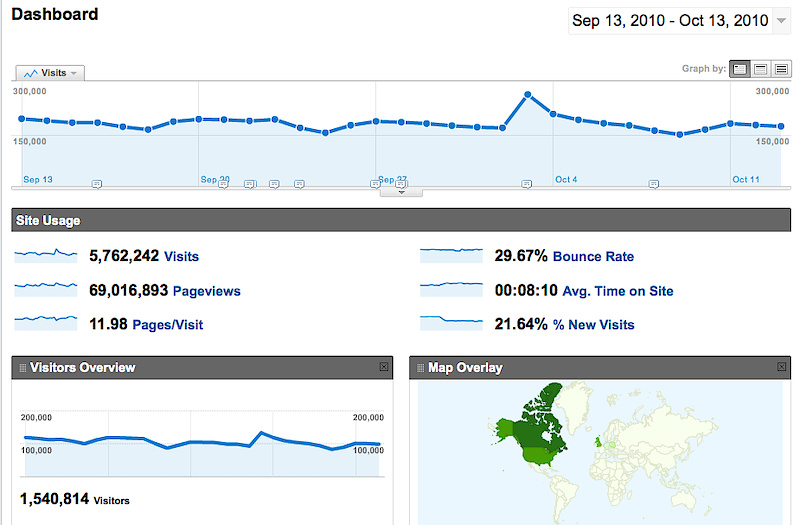

I recently saw a story on Hacker News about some guys stoked to be serving 60 million high-read-ratio pages per month with 4 servers. It's awesome to hit those numbers on any site, but if we're showing off, let's check out how Pinkbike does it.

Pinkbike serves 70 million highly dynamic, non-cached pages per month, with a large write ratio, from 1 server.

In addition, we push 700 Mbps (95th percentile), which works out to about 140 TB of data served per month. Neato.
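The two bandwidth figures can be cross-checked with a little arithmetic. This sketch assumes decimal units (1 TB = 10^12 bytes, 1 Mbps = 10^6 bits/s), a 30-day month, and reads "(95%)" as a 95th-percentile figure; the function names are hypothetical.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month


def tb_per_month_to_avg_mbps(tb: float, seconds: int = SECONDS_PER_MONTH) -> float:
    """Average rate (decimal Mbps) needed to move `tb` decimal terabytes in `seconds`."""
    return tb * 1e12 * 8 / seconds / 1e6


def sustained_mbps_to_tb(mbps: float, seconds: int = SECONDS_PER_MONTH) -> float:
    """Decimal terabytes moved if `mbps` were sustained for the whole period."""
    return mbps * 1e6 / 8 * seconds / 1e12


print(f"140 TB/month ~ {tb_per_month_to_avg_mbps(140):.0f} Mbps average")
print(f"700 Mbps sustained ~ {sustained_mbps_to_tb(700):.1f} TB/month")
```

So 140 TB/month corresponds to roughly 430 Mbps on average, while 700 Mbps held around the clock would be about 227 TB. That gap is what you would expect if 700 Mbps is a 95th-percentile peak rather than a flat average.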

Details below...

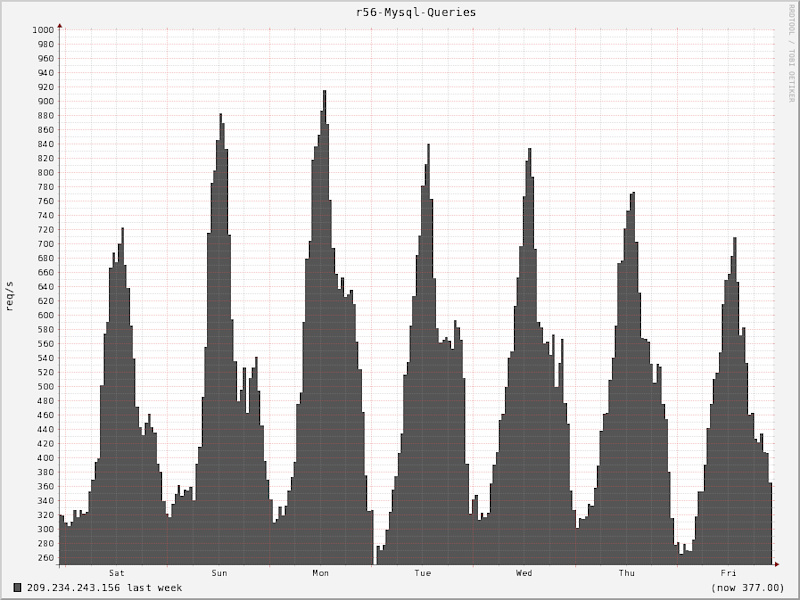

We serve 70M actual client pages per month. If you count all the AJAX calls in the system, which exercise the framework and database exactly like a full page does, we handle around 100M requests per month. Pinkbike is highly dynamic, with thousands of comments, props, forum posts, private messages, photos, and videos posted every day. Pinkbike runs on a custom framework called WosCode. Everything is built on top of WosCode, which allows for some very fancy caching. For example, database joins are used as little as possible, and the framework provides a "late join" memory/cache system.
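WosCode's internals aren't described here, so the following is only a minimal sketch of the general "late join" idea under our own assumptions: fetch the main rows without a SQL JOIN, then attach related records (here, users) from an in-process cache, querying the database only for cache misses. The table names, the `LateJoinCache` class, and the plain dict standing in for a memory cache are all hypothetical; `sqlite3` is used only so the example runs on its own.

```python
import sqlite3


class LateJoinCache:
    """Hypothetical late-join helper: no SQL JOIN, related rows come from a cache."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.users = {}       # user_id -> user dict; stands in for a shared memory cache
        self.db_lookups = 0   # batch queries actually sent to the DB for users

    def get_users(self, ids):
        missing = sorted({i for i in ids if i not in self.users})
        if missing:
            # One batched query for all cache misses, instead of joining on every page.
            self.db_lookups += 1
            placeholders = ",".join("?" * len(missing))
            rows = self.conn.execute(
                f"SELECT id, name FROM users WHERE id IN ({placeholders})", missing
            )
            for uid, name in rows:
                self.users[uid] = {"id": uid, "name": name}
        return {i: self.users[i] for i in ids if i in self.users}

    def comments_for_photo(self, photo_id: int):
        # Step 1: fetch only the comment rows; user_id is just a foreign key here.
        rows = self.conn.execute(
            "SELECT id, user_id, body FROM comments WHERE photo_id = ? ORDER BY id",
            (photo_id,),
        ).fetchall()
        # Step 2: "late join" the user records from the cache.
        users = self.get_users([uid for _, uid, _ in rows])
        return [{"id": cid, "body": body, "user": users.get(uid)} for cid, uid, body in rows]


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE comments (id INTEGER PRIMARY KEY, photo_id INTEGER, user_id INTEGER, body TEXT);
    INSERT INTO users VALUES (1, 'radek'), (2, 'rider');
    INSERT INTO comments VALUES (1, 10, 1, 'Nice line'), (2, 10, 2, 'Sick'), (3, 11, 1, 'Send it');
    """
)
cache = LateJoinCache(conn)
first = cache.comments_for_photo(10)   # misses users 1 and 2: one batched query
second = cache.comments_for_photo(11)  # user 1 is already cached: no user query
print(cache.db_lookups)
```

The trade-off is that the cache must be invalidated when a user record changes, but hot records (active users, popular photos) get served from memory on almost every page.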

We have also done some fancy TCP tuning in the past. For years we ran a recompiled TCP stack that raised the initial congestion window used by slow start from 2 to 8 segments. A new TCP connection always begins in slow start, which means the server can only send 2 packets before it has to wait for an ACK from the client. Each ACK grows the window by one segment, so the limit roughly doubles every round trip. This tweak let us return even our largest pages in the first RTT, instead of wasting round trips ramping up the congestion window on every connection. (For average-size pages, it cuts the full load from 5 RTTs to just 2.)

I see ping times of 200ms within North America, and users across the pond may have RTTs of 500ms, so you're potentially saving a couple of seconds on every request for those users. Even on the best connections you're still limited by that pesky speed of light, so expect at least 50ms across the country. Mobile users are another group who would love lower-latency pages and apps. If you want to return that first page as fast as possible, you should recompile your kernel and supercharge your TCP stack. I don't think the IETF will come knocking on my door, since the default TCP congestion specs are a remnant from 20 years ago, when an internet core router had less memory than my iPhone.
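To see why the initial window matters, here is a minimal model of idealized slow start: no packet loss, no receiver-window limit, no delayed ACKs, and a window that doubles every round trip. It counts the round trips needed to fetch a page on a fresh connection, plus one RTT for the TCP handshake (DNS and TLS are ignored). The function name and the 1460-byte segment size are assumptions for illustration. For reference, newer Linux kernels already default to an initial window of 10 segments (RFC 6928) and let you set it per route with `ip route change` and the `initcwnd` option, without recompiling.

```python
import math

MSS = 1460  # assumption: typical Ethernet-sized TCP segment payload, in bytes


def rtts_to_load(page_bytes: int, initcwnd: int, mss: int = MSS) -> int:
    """Round trips to fetch a page on a fresh connection under idealized slow start."""
    segments = math.ceil(page_bytes / mss)
    cwnd, sent, data_rounds = initcwnd, 0, 0
    while sent < segments:
        sent += cwnd      # send a full window, then wait one RTT for the ACKs
        cwnd *= 2         # slow start: the window roughly doubles per round trip
        data_rounds += 1
    return 1 + data_rounds  # +1 RTT for the SYN / SYN-ACK handshake


for size_kb in (10, 30, 60):
    old, new = rtts_to_load(size_kb * 1000, 2), rtts_to_load(size_kb * 1000, 8)
    print(f"{size_kb} KB page: {old} RTTs with initcwnd=2, {new} with initcwnd=8")
```

Every round trip saved is worth a full RTT of wall-clock time: around 200ms for the North American users mentioned above, and up to 500ms for users across the pond.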

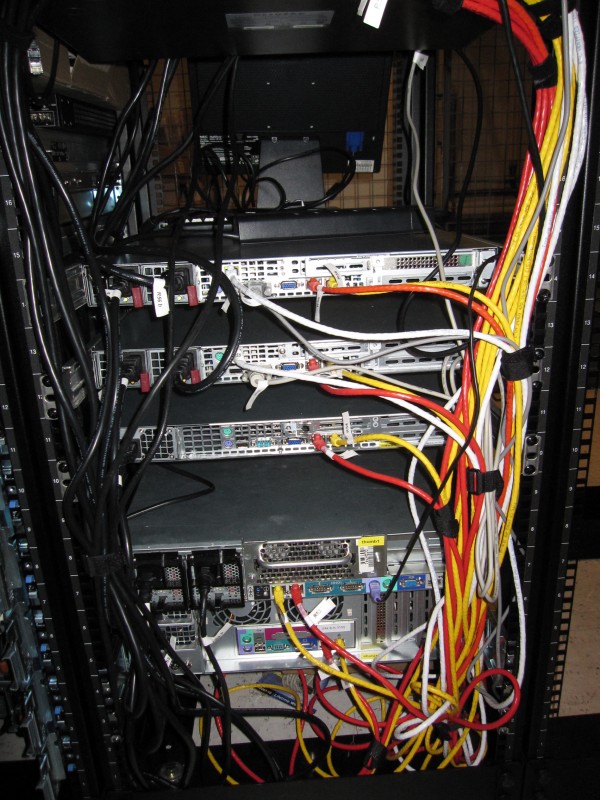

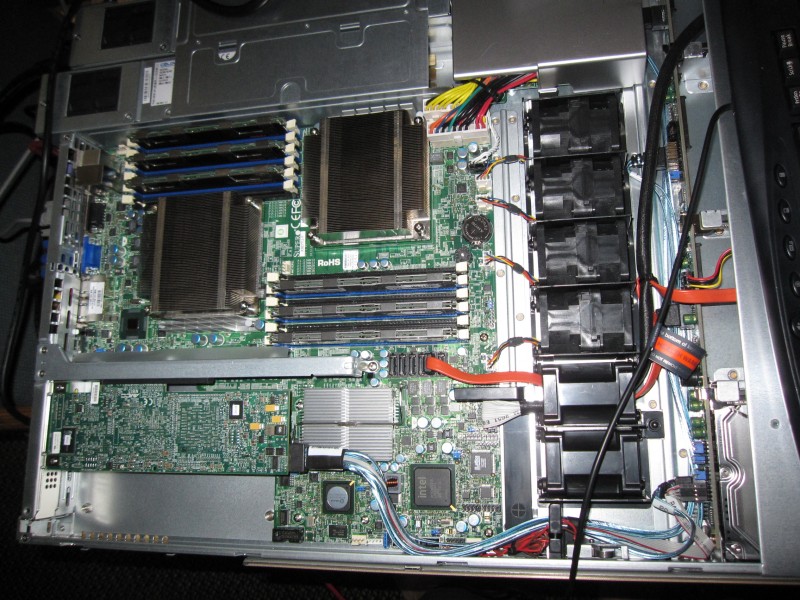

There is actually no reason to do all this on one server, and we could easily split things up. In fact, the architecture was originally much more horizontal, but we saw those servers just sitting there doing nothing, so we thought, let's see how efficient we can make it. The one server currently runs Varnish, Apache/PHP, MySQL, and Lucene. There are 4 instances of Lucene doing some nifty faceting for the Buy/Sell section and the forums, and handling all the search. There are literally millions of photos on Pinkbike, so search is demanding. We only cache robots.txt, crossdomain.xml, and favicon.ico with Varnish (surprisingly, there are a lot of requests for these); all other pages are non-cacheable and go straight through as pass/pipe.
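The caching policy described above is small enough to state as a single rule. In practice it would live in Varnish's VCL (in `vcl_recv`); the Python below only models the decision so the logic is easy to read and test. The function name, the return labels, and the choices to cache only GET/HEAD and to ignore query strings are our assumptions, not Pinkbike's actual configuration.

```python
from urllib.parse import urlsplit

# The only paths the post says are cached; everything else is dynamic.
CACHEABLE_PATHS = frozenset({"/robots.txt", "/crossdomain.xml", "/favicon.ico"})


def varnish_decision(method: str, url: str) -> str:
    """Return 'cache' for the few static files, 'pass' for everything else."""
    path = urlsplit(url).path  # assumption: query strings don't change these files
    if method in ("GET", "HEAD") and path in CACHEABLE_PATHS:
        return "cache"
    return "pass"  # hand straight to Apache/PHP, never stored


for method, url in [("GET", "/robots.txt"), ("GET", "/favicon.ico?v=2"),
                    ("GET", "/forum/"), ("POST", "/crossdomain.xml")]:
    print(method, url, "->", varnish_decision(method, url))
```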

Editor's Note: Aside from being your in-house nerd who takes care of the architecture and programming that bring you Pinkbike, Radek can be found riding his bicycle on silly stunts or racing moto in the Baja 1000.

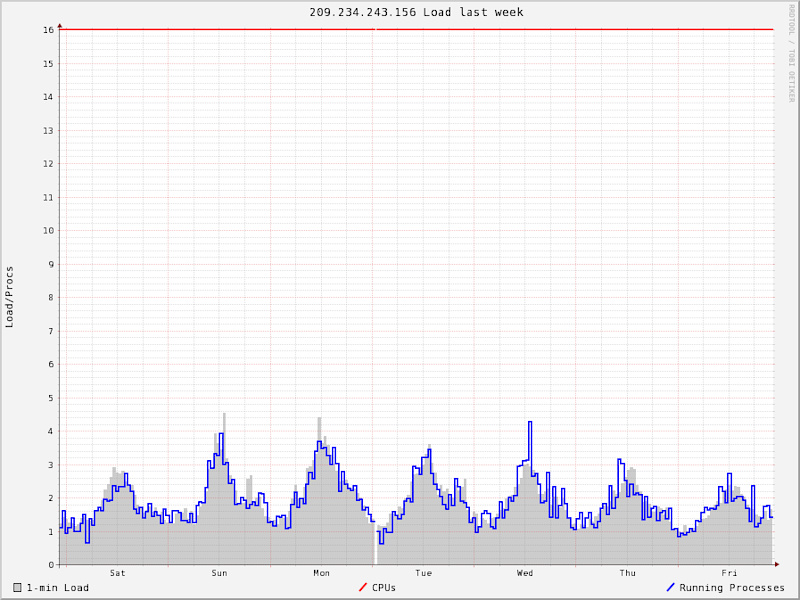

Here are the Apache requests per second. It's active. These are only the dynamic PHP/MySQL-generated pages; static content is not included.
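For a sense of scale, the monthly totals quoted earlier can be turned into average request rates. This assumes a 30-day month and a perfectly even spread, which real traffic never has; the graph shows daily peaks and troughs, so peak rates will be well above these averages. The helper name is hypothetical.

```python
SECONDS_PER_MONTH = 30 * 24 * 3600  # assumption: a 30-day month


def average_per_second(per_month: float, seconds: int = SECONDS_PER_MONTH) -> float:
    """Mean rate if `per_month` events were spread evenly over the month."""
    return per_month / seconds


pages = average_per_second(70e6)      # client page views
requests = average_per_second(100e6)  # pages plus AJAX calls
print(f"{pages:.1f} pages/s, {requests:.1f} dynamic requests/s on average")
```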

I always believe in reducing latency.

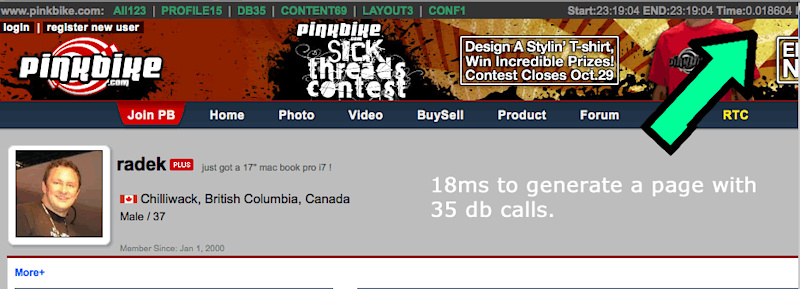

Pinkbike is fast because our pages generate fast. Low latency means less load, fewer concurrency issues, and more capacity. Pinkbike pages usually generate in under 10ms, including all DB, cache, and PHP template work.
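One way to see why low latency buys capacity is Little's law: the average number of requests in flight equals the arrival rate times the time each request spends in the system. The sketch below applies it to page generation only; the 40 requests/s rate and the 200ms comparison point are illustrative assumptions, not Pinkbike measurements.

```python
def in_flight(rate_per_s: float, latency_ms: float) -> float:
    """Little's law: L = lambda * W, the average number of concurrent requests."""
    return rate_per_s * latency_ms / 1000


RATE = 40  # assumption: roughly 100M requests/month spread evenly
for latency in (10, 200):
    print(f"{latency} ms pages at {RATE} req/s -> {in_flight(RATE, latency):.1f} busy workers")
```

At 10ms per page the average load is under half a worker; at 200ms it is 8 workers, each holding DB connections and competing for the same resources.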

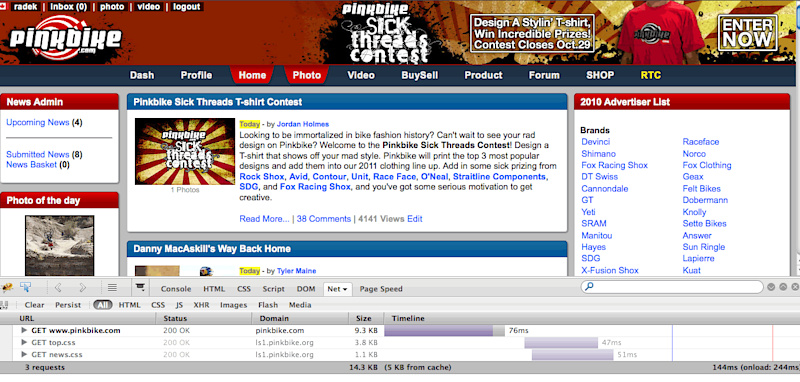

Fast pages make for a faster user experience. With a 50ms RTT, we can deliver a page to a user in 76ms. Fast. The site feels great when this happens. Ads and analytics slow things down, but many of our users have a "plus/pro" account, for which we don't load ads or analytics at all, making the experience super quick.

Recently we made things even faster.

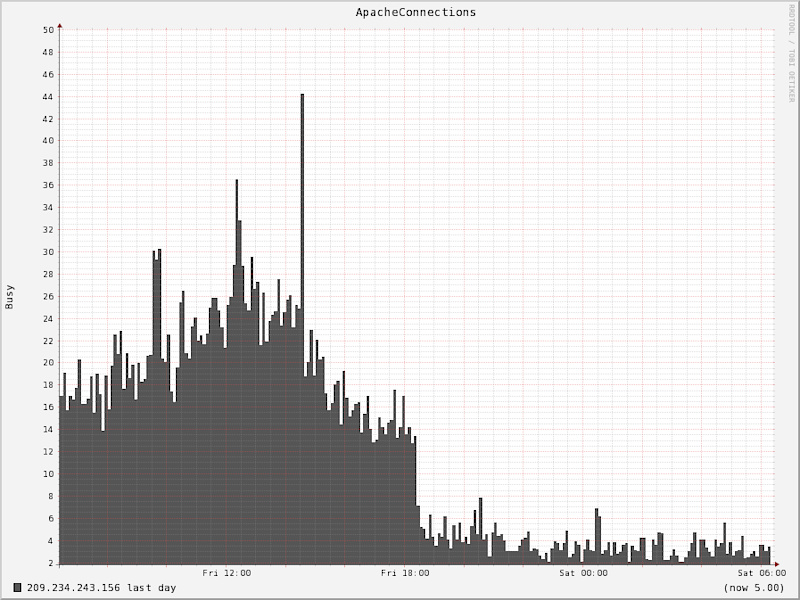

A few days ago we put a reverse proxy in front of Apache (on the same server). The main purpose was to offload slow clients and reduce active Apache connections. The idea is that Apache/PHP generates the page and hands it off to the reverse proxy very quickly; the proxy then handles the slower job of sending the data to the client, freeing Apache/PHP for other work. The result was dramatic. We're still deciding between Varnish and nginx. Also, we're being a little silly right now and have client-side keepalives turned on, just for fun.
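The benefit of the buffering proxy comes down to how long each Apache/PHP worker stays tied up per request. Without a proxy, the worker holds the connection until the last byte reaches the client; with a proxy on the same machine, it only has to hand the page over a fast local socket. The sketch below models just that, assuming a 50 KB page, a slow client receiving about 32 KB/s, and a local handoff of about 1 GB/s (all illustrative). It ignores kernel socket send buffers (which can absorb small responses outright) and keep-alive idle time, so treat it as a rough illustration rather than a benchmark.

```python
def worker_hold_ms(gen_ms: float, page_bytes: int, client_bytes_per_s: float,
                   proxied: bool, local_bytes_per_s: float = 1e9) -> float:
    """Time an Apache/PHP worker is busy: generate the page, then drain it."""
    # With a proxy, Apache drains to localhost; the proxy deals with the slow client.
    drain_rate = local_bytes_per_s if proxied else client_bytes_per_s
    return gen_ms + page_bytes / drain_rate * 1000


direct = worker_hold_ms(10, 50_000, 32_000, proxied=False)
behind_proxy = worker_hold_ms(10, 50_000, 32_000, proxied=True)
print(f"direct: {direct:.1f} ms, behind proxy: {behind_proxy:.2f} ms per request")
```

Under these assumptions a worker is busy for about 1.6 seconds per request when talking to the slow client directly, versus roughly the 10ms generation time behind the proxy, which is exactly the reduction in active Apache connections the proxy was meant to deliver.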

Just because we can do it all on one server doesn't mean we skimp on storage, backup, and redundancy; we have a ton of it. Static content like photos and videos comes from other servers. We push 700 Mbps!
